Hack2023 1st Prize: Difference between revisions


Image (Optare1.png): From left to right, Santiago Rodriguez & Fernando Lamela

= Introduction = | = Introduction = | ||

<p> | <p> | ||

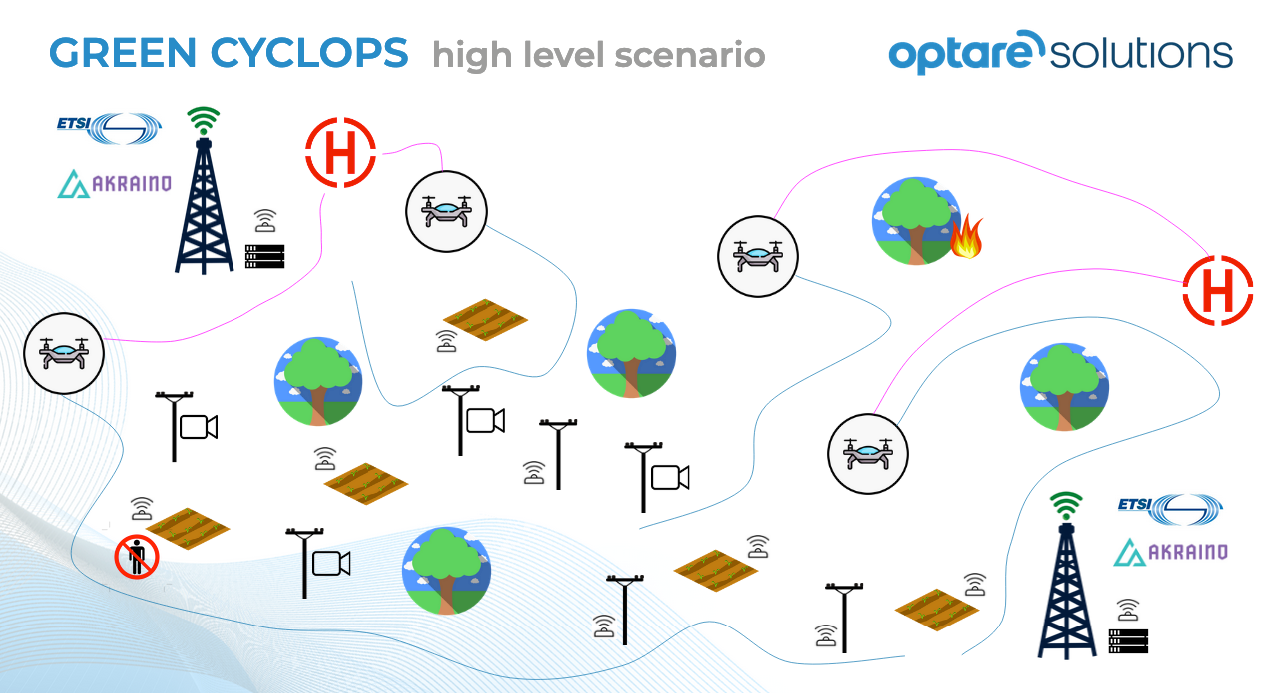

A computer vision platform or ecosystem that utilizes 5G, edge computing, and AI to handle | |||

multiple video stream sources from various devices such as drones, fixed cameras, and IoT | |||

sensors. </p> | |||

< | <p> | ||

This platform aims to enable the application of different AI detection and analytic | |||

models based on user selection. </p> | |||

<p> | |||

The inference process takes place at the edge, where computational resources are shared to execute AI models relevant to different scenarios, including forest fire and smoke detection, wildlife control, and deforestation. | |||

</p> | </p> | ||

= | [[File:Green-cyclops.png|800px|center|top|class=img-responsive]] | ||

<br> | <br> | ||

The key components and functionalities of this computer vision platform can be described as | |||

< | follows: | ||

<p> | |||

< | • Video Stream Management: The platform receives and manages multiple video | ||

streams and information from diverse sources, such as drones, fixed cameras, and IoT | |||

sensors. These streams are transmitted via the high-speed and low-latency capabilities | |||

of a 5G network. | |||

</p> | |||

<p> | |||

• IoT Events Management: The platform receives and manages multiple events from | |||

devices placed on the ground to add information to the use cases in the described | |||

scenario. </p> | |||

<p> | |||

• Edge Computing: The platform leverages edge computing infrastructure, which brings | |||

computational resources closer to the video sources. This allows for real-time analysis | |||

and decision-making at the network edge, reducing the need for data transmission to a | |||

central server or cloud. Edge computing facilitates faster response times and more | |||

efficient resource utilization.</p> | |||

<p> | |||

• AI Detection and Analytic Models: The platform supports the selection and deployment | |||

of different AI detection and analytic models based on specific requirements. For | |||

example, models can be chosen for forest fire and smoke detection, wildlife monitoring, | |||

or deforestation analysis. These models utilize computer vision techniques, such as | |||

object detection, image classification, and semantic segmentation, to extract | |||

meaningful information from the video streams.</p> | |||

<p> | |||

• Model Inference at the Edge: The selected AI models are executed at the edge | |||

computing nodes, where the necessary computational resources are available. This | |||

eliminates the need to transmit large volumes of video data to a centralized server or | |||

cloud for inference. By performing inference at the edge, the platform achieves | |||

real-time analysis and enables quick response to detected events or anomalies.</p> | |||

<p> | |||

• Resource Sharing: allowing for the simultaneous execution of multiple AI models on | |||

different video streams. This flexibility enables efficient resource allocation and | |||

scalability to handle varying workloads.</p> | |||

<p> | |||

• Scenario-Specific Applications: The platform is adaptable and customizable to address | |||

a wide range of use cases.</p> | |||

<p> | |||

• Cloud Management Applications: The platform delivers cloud components for high level | |||

supervision and management purposes, as well as use case configuration to be | |||

deployed over the edge nodes.</p> | |||

<p> | |||

• Sustainable applications: All the AI applications will be measured in terms of energy | |||

consumption making smart decisions on how to run trying to make a sustainable | |||

context</p> | |||

<p> | |||

• The offloading of the AI to the edge makes more efficient the drone consumption | |||

helping in the autonomy of the UAVs | |||

</p> | |||

<br><br> | <br><br> | ||

| Line 98: | Line 115: | ||

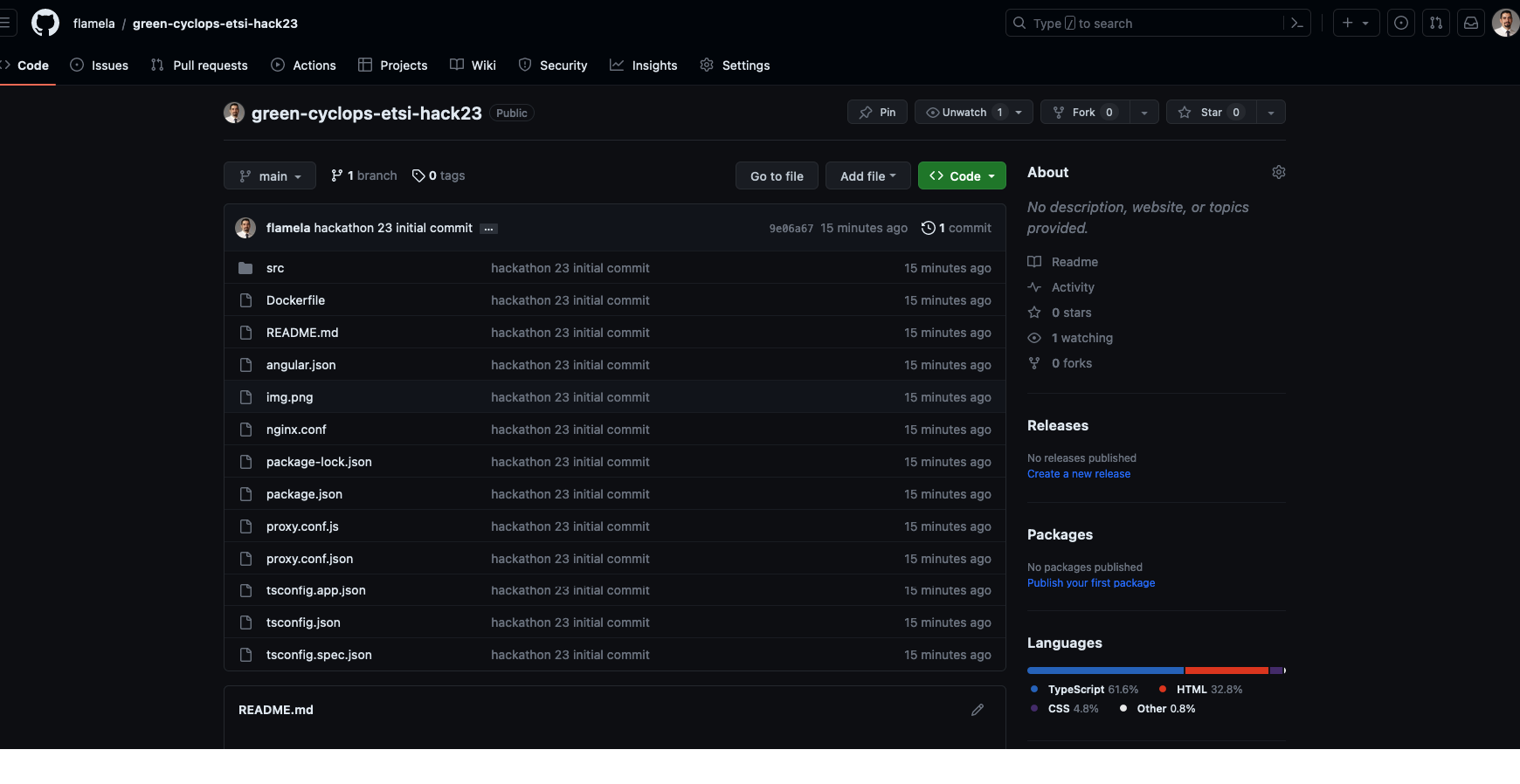

• '''Project repository''' | • '''Project repository''' | ||

https://github.com/flamela/ | https://github.com/flamela/green-cyclops-etsi-hack23 | ||

Web App for Managing green cyclops Edge app and model ecosystem as well as dashboard representations. | |||

Need to be complemented with backend API not availbale in this repo, that is only uploaded to be used as inspiration or reusability, for another teams. | |||

[[File:Github-optare.png|600px|center|class=img-responsive]] | |||

Project Videos

https://www.youtube.com/watch?v=zmEYpDqo0FU

Latest revision as of 09:04, 28 March 2024

1st Prize Award

Managing natural resources using AI, Edge Computing and Advanced Communication

Team

Team Green Cyclops from Optare Solutions

- Xose Ramon Sousa Vazquez

- Santiago Rodriguez Garcia

- Fernando Lamela Nieto

Introduction

The project is a computer vision platform, or ecosystem, that uses 5G, edge computing, and AI to handle multiple video streams from devices such as drones, fixed cameras, and IoT sensors.

The platform aims to let users select which AI detection and analytics models are applied.

The inference process takes place at the edge, where computational resources are shared to execute AI models relevant to different scenarios, including forest fire and smoke detection, wildlife control, and deforestation.
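As an illustration of applying models "based on user selection", the following is a minimal sketch and not the project's actual code. The registry, the `StreamConfig` class, the scenario keys, and the score thresholds are all hypothetical, and the lambda detectors are stand-ins for trained models.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# A frame is modeled as a plain dict of precomputed scores (an assumption);
# a real deployment would pass decoded image data to a trained model.
Frame = Dict[str, float]
Detector = Callable[[Frame], bool]

# Hypothetical registry: scenario name -> stand-in detector.
MODEL_REGISTRY: Dict[str, Detector] = {
    "fire_smoke": lambda f: f.get("smoke_score", 0.0) > 0.5,
    "wildlife": lambda f: f.get("animal_score", 0.0) > 0.5,
    "deforestation": lambda f: f.get("canopy_loss", 0.0) > 0.3,
}


@dataclass
class StreamConfig:
    """Per-stream user selection of models (hypothetical structure)."""
    stream_id: str
    selected_models: List[str] = field(default_factory=list)


def run_selected_models(config: StreamConfig, frame: Frame) -> Dict[str, bool]:
    """Run only the models the user selected for this stream."""
    results: Dict[str, bool] = {}
    for name in config.selected_models:
        detector = MODEL_REGISTRY[name]  # raises KeyError for unknown models
        results[name] = detector(frame)
    return results


drone = StreamConfig("drone-1", ["fire_smoke", "wildlife"])
detections = run_selected_models(drone, {"smoke_score": 0.8, "animal_score": 0.1})
print(detections)  # {'fire_smoke': True, 'wildlife': False}
```

Keeping the selection in a per-stream configuration means the same edge node can serve different scenarios without changing the inference loop.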

The key components and functionalities of this computer vision platform can be described as follows:

• Video Stream Management: The platform receives and manages multiple video streams and information from diverse sources, such as drones, fixed cameras, and IoT sensors. These streams are transmitted via the high-speed and low-latency capabilities of a 5G network.

• IoT Events Management: The platform receives and manages events from devices placed on the ground, which add information to the use cases in the described scenario.

• Edge Computing: The platform leverages edge computing infrastructure, which brings computational resources closer to the video sources. This allows for real-time analysis and decision-making at the network edge, reducing the need for data transmission to a central server or cloud. Edge computing facilitates faster response times and more efficient resource utilization.

• AI Detection and Analytic Models: The platform supports the selection and deployment of different AI detection and analytic models based on specific requirements. For example, models can be chosen for forest fire and smoke detection, wildlife monitoring, or deforestation analysis. These models utilize computer vision techniques, such as object detection, image classification, and semantic segmentation, to extract meaningful information from the video streams.

• Model Inference at the Edge: The selected AI models are executed at the edge computing nodes, where the necessary computational resources are available. This eliminates the need to transmit large volumes of video data to a centralized server or cloud for inference. By performing inference at the edge, the platform achieves real-time analysis and enables quick response to detected events or anomalies.

• Resource Sharing: Computational resources at the edge are shared, allowing for the simultaneous execution of multiple AI models on different video streams. This flexibility enables efficient resource allocation and scalability to handle varying workloads.

• Scenario-Specific Applications: The platform is adaptable and customizable to address a wide range of use cases.

• Cloud Management Applications: The platform delivers cloud components for high-level supervision and management, as well as for configuring the use cases to be deployed over the edge nodes.

• Sustainable Applications: The energy consumption of all AI applications will be measured, so that the platform can make smart decisions about how to run them and keep the deployment sustainable.

• Offloading the AI to the edge makes the drones' energy consumption more efficient, which helps the autonomy of the UAVs.
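The resource-sharing and energy-measurement ideas in the list above can be sketched as a simple placement routine. This is a hedged illustration only: the `EdgeNode` class, the capacity unit, the `watts_per_job` figures, and the greedy policy are assumptions made for the example, not a description of how the platform schedules work.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class EdgeNode:
    """Hypothetical edge node shared by several (stream, model) jobs."""
    name: str
    capacity: int          # max concurrent model instances (assumed unit)
    watts_per_job: float   # assumed average power draw per running model
    jobs: List[Tuple[str, str]] = field(default_factory=list)

    def has_room(self) -> bool:
        return len(self.jobs) < self.capacity


def place_job(nodes: List[EdgeNode], stream_id: str, model: str) -> Optional[EdgeNode]:
    """Greedy placement: choose the node with free capacity and lowest power per job."""
    candidates = [n for n in nodes if n.has_room()]
    if not candidates:
        return None  # no shared capacity left; the caller must queue or reject
    best = min(candidates, key=lambda n: n.watts_per_job)
    best.jobs.append((stream_id, model))
    return best


def total_power(nodes: List[EdgeNode]) -> float:
    """Estimated power draw of all running jobs, in watts."""
    return sum(n.watts_per_job * len(n.jobs) for n in nodes)


nodes = [EdgeNode("edge-a", 2, 15.0), EdgeNode("edge-b", 2, 25.0)]
requests = [
    ("drone-1", "fire_smoke"),
    ("drone-1", "wildlife"),
    ("camera-7", "deforestation"),
]
placements = [place_job(nodes, stream, model) for stream, model in requests]
placed_on = [p.name if p else None for p in placements]
print(placed_on)           # ['edge-a', 'edge-a', 'edge-b']
print(total_power(nodes))  # 55.0
```

In this sketch the cheaper node fills up first and the third job spills over to the second node, so several models run at once on shared hardware while the estimated energy cost stays visible.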

Software resources

• Project repository

https://github.com/flamela/green-cyclops-etsi-hack23

Web app for managing the Green Cyclops edge app and model ecosystem, as well as dashboard representations.

It needs to be complemented with a backend API that is not available in this repo. The repo is uploaded only to serve as inspiration or for reuse by other teams.