Hack2023 2nd Prize

Revision as of 16:13, 11 December 2023

2nd Prize Award

Project Sheikah Tower

AI Assistants powered by local people

Enhance usefulness based on time and place

Team

Team Project Sheikah Tower, from Google LLC and Peking University:

• Qi Tang - Senior Hardware Engineer - Google

• Yi Han - Google

• Sharu Jiang - Peking University

Introduction

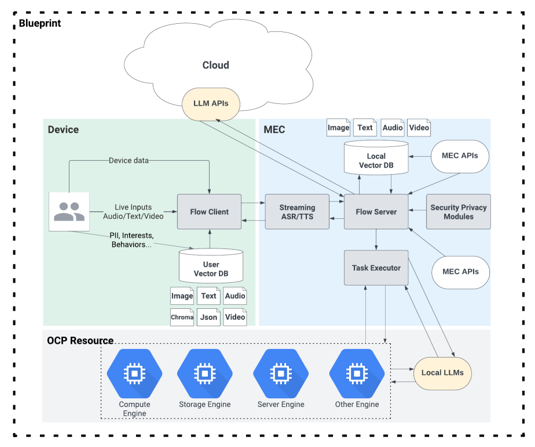

Edge Native Real-time Voice AI Assistant provides language and speech services enhanced with local information (e.g., real-time translation and an AI voice bot), built on state-of-the-art AI models.
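Real-time translation usually depends on streaming audio in short chunks instead of uploading a whole recording, so the edge server can start processing while the user is still speaking. The following is a minimal sketch of that chunking step, assuming raw 16-bit PCM input. The function name and the default parameters (16 kHz, mono, 20 ms frames) are illustrative assumptions and are not taken from the project repository.

```python
from collections.abc import Iterator


def audio_frames(pcm: bytes, sample_rate: int = 16_000, sample_width: int = 2,
                 channels: int = 1, frame_ms: int = 20) -> Iterator[bytes]:
    """Split raw PCM audio into fixed-duration frames for streaming to an edge server.

    The defaults (16 kHz, 16-bit, mono, 20 ms) are illustrative assumptions.
    """
    # Bytes per frame = samples/s * bytes/sample * channels * seconds per frame.
    bytes_per_frame = sample_rate * sample_width * channels * frame_ms // 1000
    if bytes_per_frame <= 0:
        raise ValueError("frame parameters yield an empty frame")
    for start in range(0, len(pcm), bytes_per_frame):
        # The final frame may be shorter than bytes_per_frame.
        yield pcm[start:start + bytes_per_frame]


if __name__ == "__main__":
    one_second = bytes(32_000)  # 1 s of silence at 16 kHz, 16-bit mono
    print(sum(1 for _ in audio_frames(one_second)))  # 50 frames of 640 bytes
```

With these defaults each frame carries 16,000 × 2 × 1 × 0.02 = 640 bytes, so one second of audio becomes 50 frames. Smaller frames lower latency at the cost of more per-message overhead.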

The key feature is real-time streaming served by edge computing. We will also leverage the ETSI MEC APIs to fine-tune or prompt the LLM with available local information (such as dialects, geography, and local culture), so that users receive faster and more useful content. We will demonstrate the advantages of edge computing over traditional on-device and cloud-based services. End-user interfaces can include mobile devices, wearables, IoT devices, robots, and vehicles.
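One way to prompt an LLM with local information is retrieval-augmented prompting. In this approach, the documents registered for the user's location are ranked against the question, and the best matches are prepended to the prompt. The sketch below illustrates that idea under stated assumptions. A toy bag-of-words cosine similarity stands in for a real embedding model and vector database. The names `LocalStore`, `STORES`, and `build_prompt`, as well as the zone ID and its contents, are hypothetical and do not come from the project.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a real deployment would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


@dataclass
class LocalStore:
    """Hypothetical per-location store: a system prompt plus documents from a local business owner."""
    system_prompt: str
    documents: list[str] = field(default_factory=list)

    def top_k(self, query: str, k: int = 2) -> list[str]:
        """Return the k documents most similar to the query (ties keep insertion order)."""
        q = embed(query)
        return sorted(self.documents, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


# Hypothetical stores keyed by ZoneID; IDs and contents are illustrative only.
STORES: dict[str, LocalStore] = {
    "zone-museum": LocalStore(
        system_prompt="You are a museum guide. Answer briefly.",
        documents=[
            "The east wing hosts the bronze age collection.",
            "The cafe on the ground floor closes at 5 pm.",
            "Guided tours start every hour at the main entrance.",
        ],
    ),
}


def build_prompt(zone_id: str, question: str, k: int = 2) -> str:
    """Assemble an LLM prompt from the store registered for the user's zone."""
    store = STORES[zone_id]
    context = "\n".join(f"- {doc}" for doc in store.top_k(question, k))
    return f"{store.system_prompt}\nLocal context:\n{context}\nUser: {question}"


if __name__ == "__main__":
    print(build_prompt("zone-museum", "When does the cafe close?", k=1))
```

In this example, the question about the cafe selects the cafe document as local context. Swapping in a different zone's store changes both the system prompt and the retrievable facts without touching the model itself.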

Main Features:

● Users can easily find nearby virtual assistants in a map view, using MEC APIs.

● Each local AI virtual assistant is indexed by ZoneID and CellID.

● “Local” means that the vector database and prompts are location-dependent.

● The vector database and prompts are designed and uploaded by local business owners.

● The virtual assistants can also be sophisticated, state-of-the-art AI models that serve as real-time language interpreters (for example, Meta’s latest massively multilingual speech-to-speech models). Users can find these on the map too, as long as the assistant is in the same ZoneID or CellID as the user.

● The search range is also flexible. For example, indoor localization information from MEC APIs can narrow results to a single room for a museum exhibit tour, while the GPS signal of the user’s device can support a city tour.

● The app is agnostic to the end-user device, because computation, memory, and location information do not reside on the device itself. We chose iOS for demonstration purposes only.
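The discovery rules above can be sketched as follows: an assistant is visible when it shares the user's ZoneID or CellID, or, for a wider tour, when it falls within a GPS radius. This is a minimal illustration under assumptions. The `Assistant` and `UserLocation` records, the sample registry, and the coordinates are all hypothetical. In a real deployment the zone and cell identifiers would come from MEC services such as the Location API (ETSI GS MEC 013) or the Radio Network Information API (ETSI GS MEC 012), which this sketch does not call.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Assistant:
    """Hypothetical registry entry for a local virtual assistant."""
    name: str
    zone_id: str
    cell_id: str
    lat: float
    lon: float


@dataclass(frozen=True)
class UserLocation:
    """Hypothetical user position; in practice the IDs would be filled in from MEC APIs."""
    zone_id: str
    cell_id: str
    lat: float
    lon: float


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters, using a spherical Earth approximation."""
    r = 6_371_000.0  # mean Earth radius in meters
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def find_nearby(user: UserLocation, registry: list[Assistant],
                radius_m: Optional[float] = None) -> list[Assistant]:
    """Zone/cell match by default; if radius_m is given, GPS radius search sorted nearest first."""
    if radius_m is None:
        # Room-scale discovery, e.g. a museum exhibit assistant in the user's zone or cell.
        return [a for a in registry if a.zone_id == user.zone_id or a.cell_id == user.cell_id]
    # City-scale discovery, e.g. a tour based on the device's GPS position.
    def dist(a: Assistant) -> float:
        return haversine_m(user.lat, user.lon, a.lat, a.lon)
    return sorted((a for a in registry if dist(a) <= radius_m), key=dist)


# Illustrative sample data only.
SAMPLE_REGISTRY = [
    Assistant("Exhibit guide", "zone-room-3", "cell-17", 48.8606, 2.3376),
    Assistant("Interpreter", "zone-lobby", "cell-17", 48.8610, 2.3360),
    Assistant("City tour", "zone-street", "cell-42", 48.8584, 2.2945),
]

if __name__ == "__main__":
    user = UserLocation("zone-room-3", "cell-17", 48.8606, 2.3376)
    print([a.name for a in find_nearby(user, SAMPLE_REGISTRY)])
    print([a.name for a in find_nearby(user, SAMPLE_REGISTRY, radius_m=5000)])
```

The zone/cell mode returns the exhibit guide (same zone) and the interpreter (same cell). The 5 km GPS mode also picks up the city tour about 3 km away.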


Software resources

• Project repository

https://github.com/Dako2/sheikah-tower.git